- Authors: Kilim Oz, Báskay János, Biricz András, Bedőházi Zsolt, Pollner Péter, Csabai István

- Published: 2024, BIOINSPIRATION & BIOMIMETICS

- URL: https://iopscience.iop.org/article/10.1088/1748-3190/ad6825

- MTMT ID: 35152435

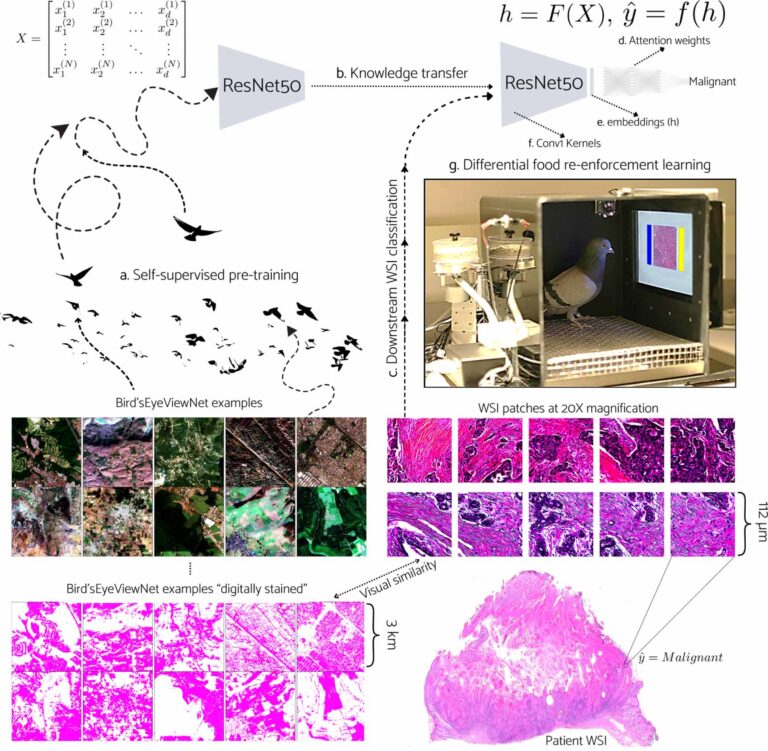

Abstract: Pigeons’ unexpected competence in learning to categorize unseen histopathological images has remained an unexplained discovery for almost a decade (Levenson et al 2015 PLoS One 10 e0141357). Could it be that knowledge transferred from their bird’s-eye views of the earth’s surface gleaned during flight contributes to this ability? Employing a simulation-based verification strategy, we recapitulate this biological phenomenon with a machine-learning analog. We model pigeons’ visual experience during flight with the self-supervised pre-training of a deep neural network on BirdsEyeViewNet, our large-scale aerial imagery dataset. As an analog of the differential food reinforcement performed in Levenson et al’s study (2015 PLoS One 10 e0141357), we apply transfer learning from this pre-trained model to the same Hematoxylin and Eosin (H&E) histopathology and radiology images and tasks that the pigeons were trained and tested on. The study demonstrates that pre-training neural networks with bird’s-eye view data results in close agreement with pigeons’ performance. These results support transfer learning as a reasonable computational model of pigeon representation learning. This is further validated with six large-scale downstream classification tasks using H&E stained whole slide image datasets representing diverse cancer types.